Semantic Tool Selection: Building Smarter AI Agents with Context-Aware Routing

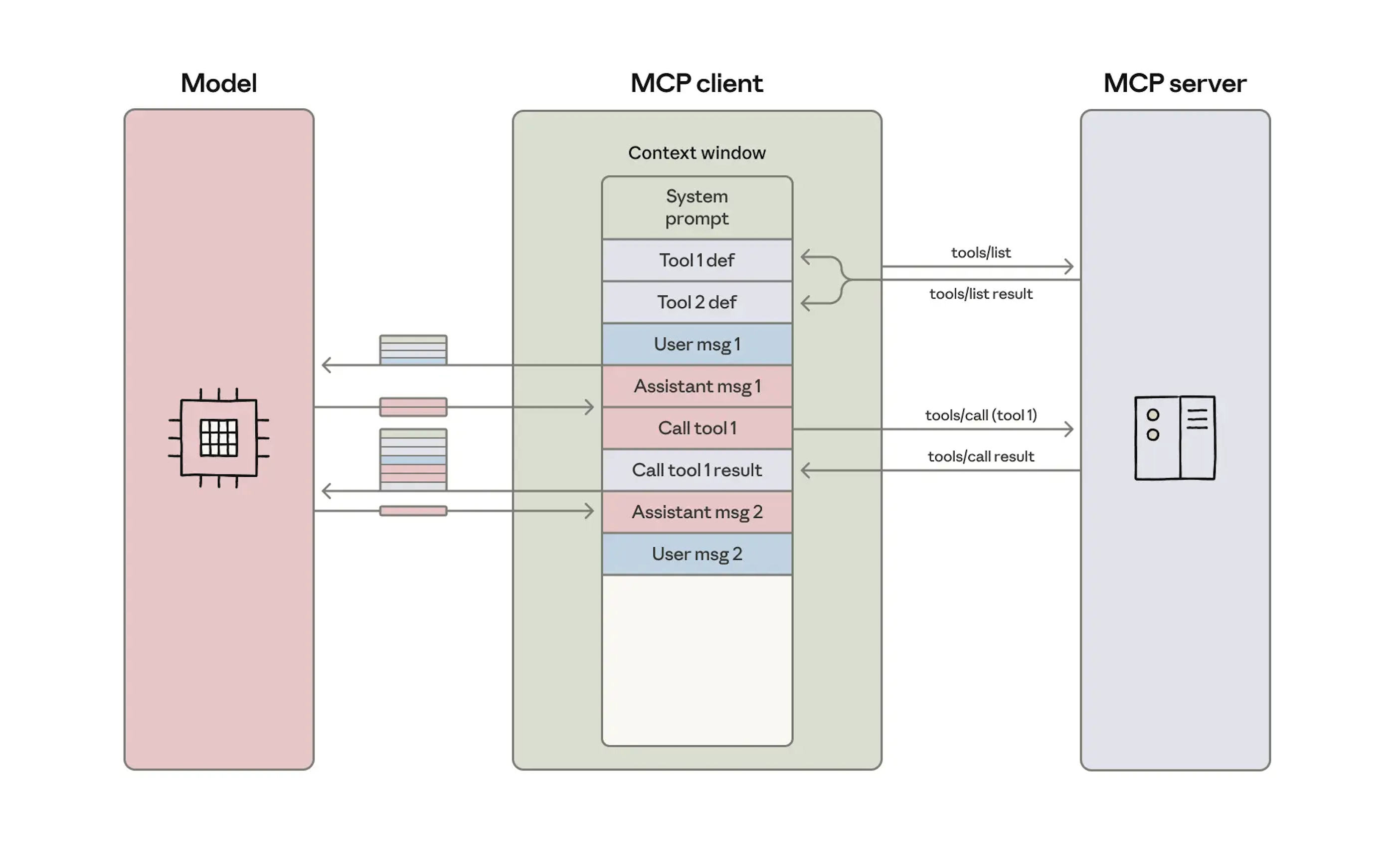

Anthropic recently published an insightful blog post on code execution with MCP, highlighting a critical challenge in modern AI systems: as agents connect to more tools, loading all tool definitions upfront becomes increasingly inefficient. Their solution, using code execution to load tools on demand, demonstrates how established software engineering patterns can dramatically improve agent efficiency.

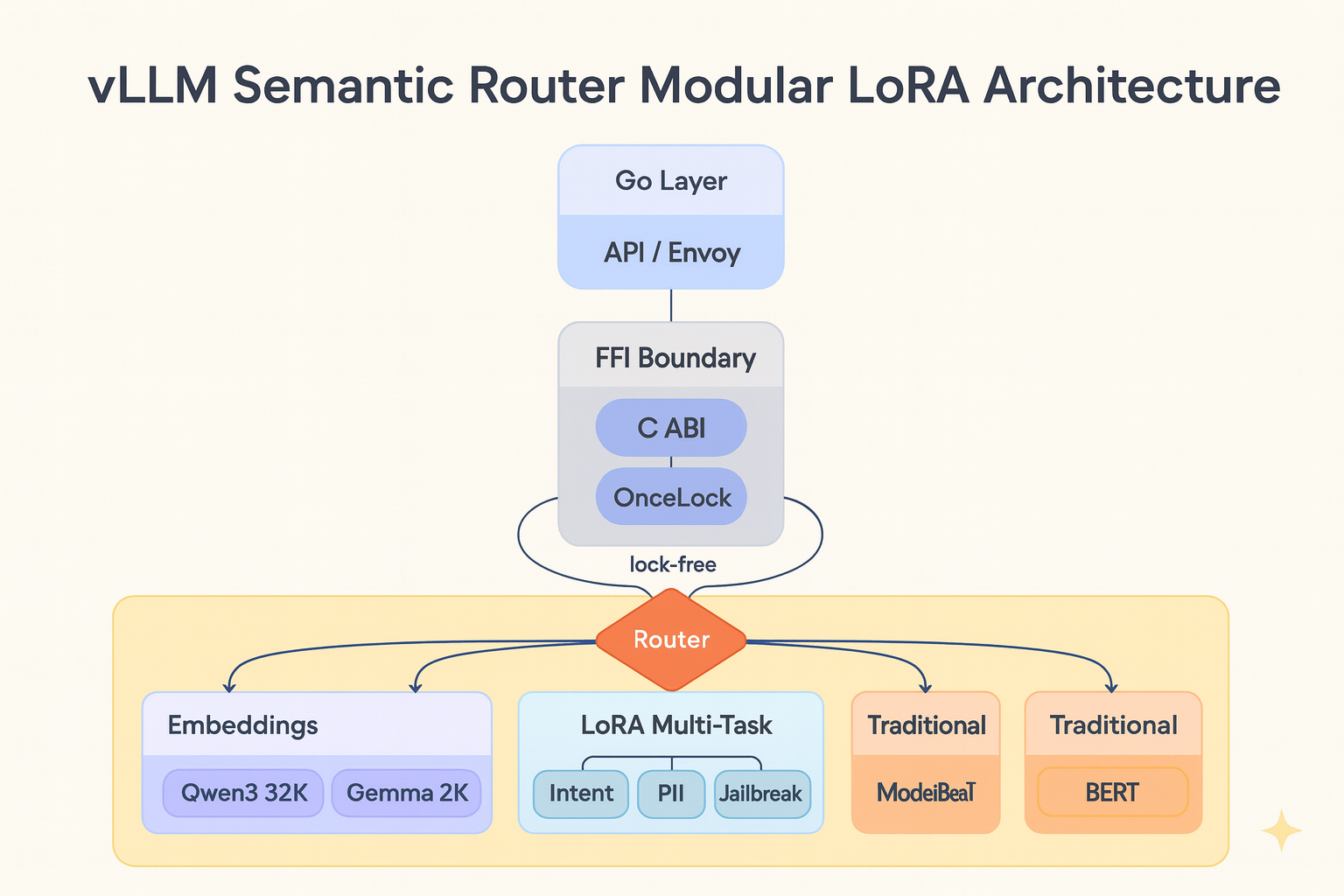

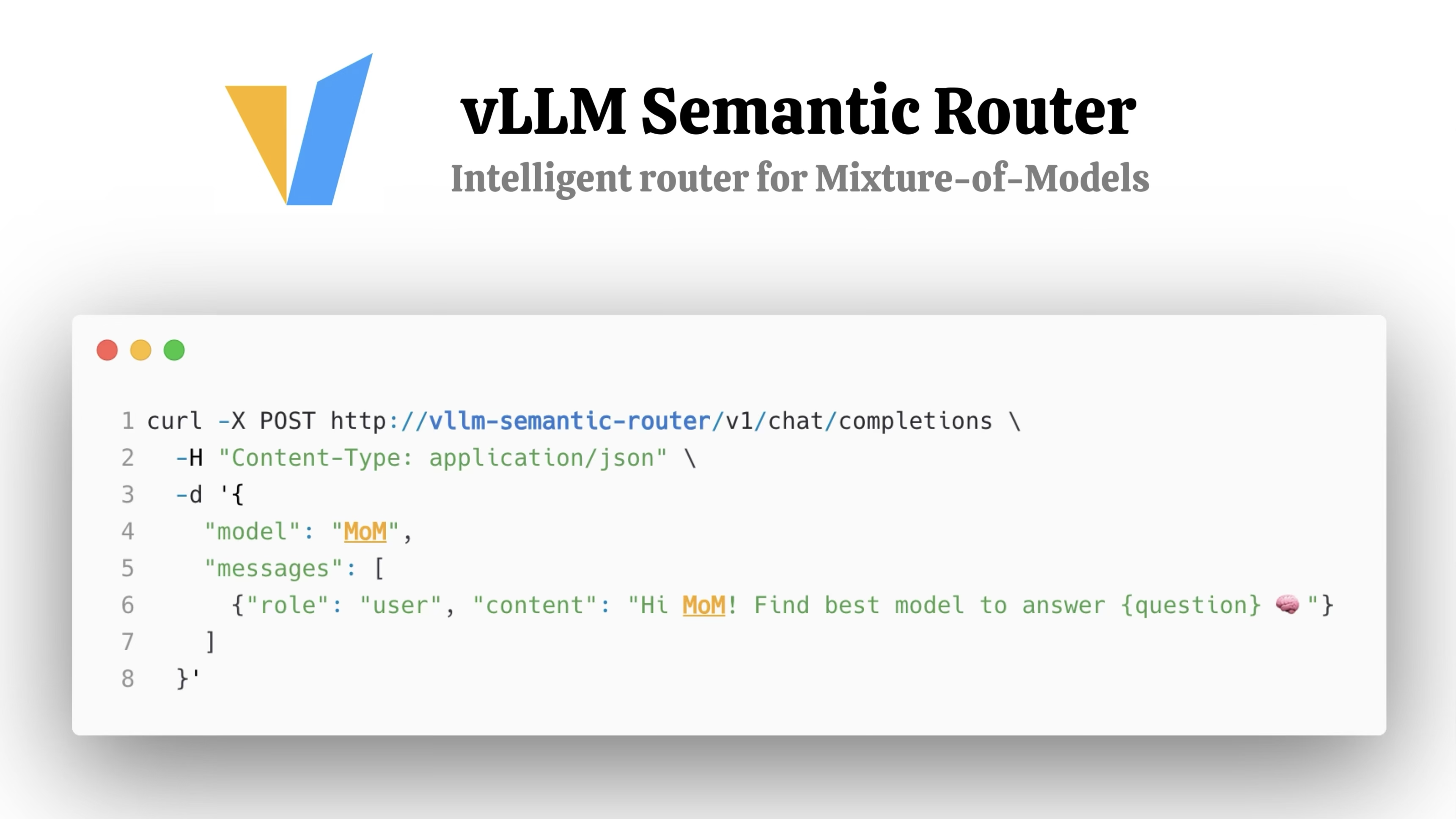

This resonates deeply with our experience building the vLLM Semantic Router. We've observed the same problem from a different angle: when AI agents have access to hundreds or thousands of tools, how do they know which tools are relevant for a given task?

Our solution is semantic tool selection: using semantic similarity to automatically select the most relevant tools for each user query before the request even reaches the LLM.
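
To make the idea concrete, here is a minimal sketch of similarity-based tool selection. It is not the vLLM Semantic Router's implementation: the tool catalog, the `select_tools` function, and the `top_k` and `threshold` parameters are hypothetical names chosen for illustration, and the `embed` function is a toy bag-of-words stand-in for a real embedding model.

```python
import math
import re
from collections import Counter

# Hypothetical tool catalog; names and descriptions are illustrative only.
TOOLS = {
    "get_weather": "Get the current weather forecast for a city",
    "send_email": "Send an email message to a recipient",
    "query_database": "Run a SQL query against the sales database",
    "create_calendar_event": "Create a calendar event or meeting invite",
}


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_tools(query, tools, top_k=2, threshold=0.2):
    """Return up to top_k tool names whose description is similar to the query."""
    q = embed(query)
    scored = [(cosine(q, embed(desc)), name) for name, desc in tools.items()]
    # Drop weak matches so unrelated tools never reach the LLM.
    scored = [(score, name) for score, name in scored if score >= threshold]
    # Highest score first; ties broken by name for deterministic output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]


selected = select_tools("What is the weather forecast in Paris?", TOOLS)
print(selected)
```

Only the definitions of the selected tools would then be attached to the LLM request. In a real system the toy `embed` function would be replaced by a learned embedding model, and the tool description vectors would typically be computed once and cached rather than recomputed per query.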